31 July 2019

Dr Peter Wootton-Beard RNutr: IBERS, Aberystwyth University.

- Effective nutrient management is an increasingly important operational process, needed for both economic and environmental reasons.

- A comprehensive plan, with close attention to detail, is required to deal with complex, interacting factors. Small, incremental changes can add up to a larger overall impact.

- An effective, sustainable nutrient management plan depends upon accurate record keeping, regular reviews and the continual refinement of practices.

There is an inevitability to growing crops: it is a business of selling nutrients. Every time produce leaves the field, it takes with it a whole range of nutrients in the form of leaves, fruit etc. That is of great benefit to whomever or whatever consumes it next, but it leaves the producer with a shortfall to make up. In the end, the success or failure of a farm business can come down to how efficiently a producer converts nutrients (in the soil and in fertilisers) into nutrients in the produce that they sell. This is the simple reality that defines nutrient management. In theory, the most effective plan would simply replace the exact amount of nutrients that left the business as produce. This is, however, hard to quantify, and even if it were easier, the dynamics of nutrient use, loss and cycling make this simple equation far more complicated. On average, a farm business is doing well if it achieves 50% efficiency in its use of nutrient inputs.

The aim of this article is, therefore, to examine the detail of nutrient management planning and provide guidance on the practical factors which offer the best opportunities to reduce nutrient losses from the system, improve efficiency and drive farm business profitability. A careful balancing act involving soil, climate, crop, timing and location is required, and success demands attention to detail.

Nutrient management has been thrust into the limelight following changes in legislation such as the EC Nitrates Directive (91/676/EEC), implemented in Wales through measures such as nitrate vulnerable zones (NVZs). The cost of fertilisers (both financial and environmental) is also rising due to greater global demand, leading producers to aim for optimal efficiency (the proportion of nutrients applied across all inputs that is recovered in farm produce) and more closed-loop approaches. Farming activities have caused major changes to the nitrogen and phosphorus cycles, contributing in particular to water and air pollution. There are, therefore, three central elements of an optimised production system that makes the best use of available nutrients: achieving optimal yield and quality, nutrient budgeting, and minimising losses to the environment.
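The efficiency measure defined above is a simple ratio. The sketch below is a minimal illustration, assuming inputs and offtake are expressed in the same units for a single nutrient (e.g. kg N/ha); the function name and the example figures are hypothetical.

```python
def nutrient_use_efficiency(applied_kg: float, recovered_kg: float) -> float:
    """Return the fraction of applied nutrient that is recovered in farm produce.

    applied_kg: total nutrient supplied across all inputs (e.g. kg N/ha).
    recovered_kg: nutrient leaving the field in produce (same units).
    """
    if applied_kg <= 0:
        raise ValueError("applied_kg must be positive")
    return recovered_kg / applied_kg


# Hypothetical example: 200 kg N/ha applied, 100 kg N/ha leaves in produce
efficiency = nutrient_use_efficiency(200.0, 100.0)
print(f"Nitrogen use efficiency: {efficiency:.0%}")  # Nitrogen use efficiency: 50%
```

At 50%, half of the nutrient supplied leaves the farm in produce; the remainder stays in the soil, is recycled, or is lost to the environment.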

The primary factor limiting growth (PFLG) / Liebig’s law of the minimum

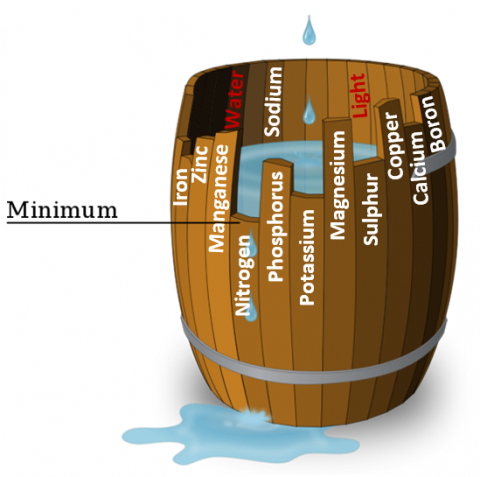

Before nutrient management planning begins, there is an important concept to keep in mind: the primary factor limiting growth (PFLG). It is sometimes called Liebig’s law of the minimum, or Liebig’s Barrel (see image). It shows that whilst many factors are essential for plant growth, the one which is least abundant (relative to the plant’s needs) becomes the PFLG. This is a crucial concept, because until the PFLG is corrected, growth will not increase no matter how much of another factor is supplied. For example, if the PFLG is magnesium, applying more nitrogen will not improve yield until the magnesium deficiency is corrected. An effective nutrient management plan therefore depends upon the PFLG being known, and within the control of the land manager. The PFLG can be physical (e.g. weather, soil structure, light, water) as well as biological (e.g. nutrients).

Liebig’s Barrel: An illustration of the primary factor limiting growth (PFLG). It is the least abundant nutrient which limits growth (stops the barrel from being completely filled). In this example, Nitrogen is the PFLG.
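The barrel analogy amounts to taking the minimum across all growth factors. The sketch below assumes each factor has already been converted to a sufficiency ratio (measured level divided by the level the crop needs, so values of 1.0 or more are not limiting); the factor names and values are hypothetical, and real crop responses are rarely this clear-cut.

```python
def primary_limiting_factor(sufficiency: dict[str, float]) -> tuple[str, float]:
    """Return the factor with the lowest sufficiency ratio (Liebig's minimum).

    sufficiency maps factor name -> measured level / required level.
    The returned ratio is capped at 1.0 (a factor at or above need limits nothing).
    """
    factor = min(sufficiency, key=sufficiency.get)
    return factor, min(sufficiency[factor], 1.0)


# Hypothetical sufficiency ratios for one field
field = {"nitrogen": 0.6, "phosphorus": 0.9, "potassium": 1.2, "magnesium": 0.8}
pflg, ratio = primary_limiting_factor(field)
print(pflg, ratio)  # nitrogen 0.6

# Supplying more potassium changes nothing: nitrogen is still the shortest stave
field["potassium"] = 2.0
assert primary_limiting_factor(field)[0] == "nitrogen"
```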

Knowing your soil (or growing medium) inside out and back to front

At the heart of any strategy to maximise the efficient use of nutrients is a comprehensive understanding of the soil (or growing medium). A doctor attending a patient can only know the patient is getting better if there is a measure of how unwell they were in the first place to compare against. Think of soil analysis, then, as the (resting) heart rate and blood pressure of a crop production business.

This understanding of the soil comes primarily from regular testing, which is the only effective method of assessment. Visual cues and productivity are highly subjective and can often be misleading. The more information that is collected about the soils of a particular farm business, the greater the opportunity to apply the principles of precision agriculture. To be most effective, soil testing must represent the spatial variation in soil types, depths, positions etc., as well as the changes which occur over time under different weather conditions and at different stages of crop growth. It is therefore necessary to develop a robust and reproducible protocol for soil sampling and testing. Depending on the soil testing service used, this method may be prescribed in advance, although in general it is likely to be a form of random sampling, where the land is divided into distinct areas and samples are then taken randomly within each area. This could mean a large number of distinct ‘areas’, particularly as a better understanding of the variation in soil parameters within the farm business is developed. Eventually it may be possible to construct a detailed map of variation over time which, together with advanced nutrient spreading machinery, could allow an amount of nutrient specific to each identified area to be delivered.
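Dividing the land into areas and sampling randomly within each one is a form of stratified random sampling. The sketch below is a minimal illustration, assuming each area can be approximated as a rectangle in local metre coordinates (real fields are irregular, and a testing service may prescribe its own pattern); the area names, sizes and number of samples are hypothetical.

```python
import random


def sample_points(areas, samples_per_area, seed=None):
    """Draw random sampling points independently within each area.

    areas: mapping of area name -> (width_m, length_m), treating each area
    as a simple rectangle (a simplifying assumption).
    Returns a mapping of area name -> list of (x, y) points in metres.
    """
    rng = random.Random(seed)  # a fixed seed makes the sampling plan reproducible
    points = {}
    for name, (width, length) in areas.items():
        points[name] = [
            (round(rng.uniform(0, width), 1), round(rng.uniform(0, length), 1))
            for _ in range(samples_per_area)
        ]
    return points


# Hypothetical field split into two areas with different soil types
areas = {"north_clay": (120.0, 80.0), "south_loam": (150.0, 60.0)}
for area, pts in sample_points(areas, samples_per_area=5, seed=42).items():
    print(area, pts)
```

Sampling each area separately ensures every soil type is represented, rather than leaving it to chance whether a small but distinct area is sampled at all.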

In the quest for economical use of nutrient inputs, you cannot know too much about your soil. Macronutrients (Nitrogen, Phosphorus and Potassium) are almost a given, but mineral levels, organic matter content and pH will also affect the potential of the soil. Any one of these factors could prove to be the PFLG, so in this sense, knowledge is power. Even when nutrients are present, their availability is further affected by factors such as water holding capacity and drainage, soil health (in terms of biodiversity) and compaction.

Improving soil management

Assuming the cost of doing so would not be too high, one of the most efficient ways to achieve gains in nutrient efficiency is to make the most of existing resources. Once knowledge of the soil (or growing medium) has been acquired, the next step is to address any deficiencies. The first instinct might be to add more of whatever is lacking, but that would underestimate the benefits of first maximising the potential of what is already there.

The availability of nutrients, and the ability of a plant to access them, is fundamentally linked to the health of the soil. As well as being a place for the plant to anchor itself, the soil is a complex site of biological and chemical interactions that are highly sensitive to change. Whilst there is a range of costs involved in correcting sub-optimal soil conditions, the benefits are often immediate and long-lasting, making these measures cost-effective (not to mention better for the environment). The aim is to enhance populations of soil organisms, from earthworms through to microscopic bacteria and fungi, by ensuring no net loss of soil organic matter, reducing tillage, using cover crops and a crop rotation (and therefore returning crop residues to the soil), avoiding or reducing compaction, and reducing the use of pesticides and inorganic fertilisers.

Factoring in location, geography and topography (and anything out of the ordinary)

There are no standard protocols here, and the knowledge of the land manager is far more important than anything science can offer. This is the point at which decisions are made about how best practice should be applied to the specific farm business, and it is vital to consider each case on its merits. In a practical sense, and at a basic level, this probably looks like a field map containing specifications for each growing area such as land use, size and soil type. However, when this information is linked with historical records for each area, it is transformed into a database upon which considered decisions can be based. Perhaps the most vital information to include is anything ‘out of the ordinary’. If ‘ordinary’ is taken to be flat land which is optimal in every sense, the important things to record become anything in a specific location which deviates from that and, crucially, how it deviates. This could include sloping land, exposure to wind, proximity to water courses (or NVZs), the presence of trees, changes in altitude, changes in soil conditions etc. These pieces of information need to be matched to factors such as soil fertility, yield and crop sequence to allow patterns to emerge. This will tell the land manager how those unusual factors affect the crop, and what adjustments may need to be made. Increasingly, this process is being supported by remote sensing technologies and geospatial mapping.
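At its simplest, a field map linked to historical records is a small database. The sketch below uses Python dataclasses to show one possible layout; the attribute names, the ‘out of the ordinary’ labels and the example areas are all hypothetical, and a spreadsheet or farm management software could do the same job.

```python
from dataclasses import dataclass, field


@dataclass
class GrowingArea:
    """One area on the field map, plus anything 'out of the ordinary' about it."""
    name: str
    land_use: str
    size_ha: float
    soil_type: str
    # Deviations from 'ordinary' (flat, optimal) land, e.g. slope or nearby water
    deviations: list[str] = field(default_factory=list)
    # Year -> yield record, so patterns can be matched against the deviations
    yield_history: dict[int, float] = field(default_factory=dict)


areas = [
    GrowingArea("Top Field", "arable", 8.5, "clay loam",
                deviations=["sloping", "near watercourse"]),
    GrowingArea("Long Meadow", "grass", 12.0, "sandy loam"),
]

# Hypothetical query: which areas need their nutrient plan adjusted for water?
near_water = [a.name for a in areas if "near watercourse" in a.deviations]
print(near_water)  # ['Top Field']
```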

The landscape (above) may throw up some ‘out of the ordinary’ factors compared with others (below) which could result in adjustments to the nutrient regime or yield predictions.

Optimising the crop sequence

The need for crop rotation in production is unlikely to come as a surprise, and it can hardly be considered ‘cutting edge’ science. However, there are layers of detail which can help land managers to develop a crop sequence with multiple benefits. The first question to ask is: for how many years is the crop sequence known? This information is important because it represents the most accurate record of nutrient inputs to, and losses from, the system. As has been discussed, saleable produce is nutrients leaving the field. However, unsaleable plant biomass, in the form of crop residues, contains nutrients which can be reclaimed. Considering these losses and gains over time provides insight which allows for more detailed crop sequence planning. Knowing the crop sequence over a 5-10 year period could allow each crop in the sequence to help redress and rebalance nutrient deficits through the residues it leaves behind. The most common example is for a nitrogen-hungry crop to follow a nitrogen-fixing crop, but by understanding the nutrient composition of crop residues (e.g. through stalk tests) and the nutrient demands of different crops, the patterns can become increasingly sophisticated. These can be added to other benefits of rotation, such as reduced pest and disease burden, to ultimately reduce reliance on costly external inputs.
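The idea of residues from one crop offsetting the needs of the next can be sketched as a simple carry-forward calculation. All figures below are placeholders, not measured values or recommendations, and `residue_availability` (the share of residue nitrogen assumed to reach the following crop) is a hypothetical parameter; real values vary with residue type, soil and weather.

```python
# Hypothetical crop sequence: (crop, N requirement kg/ha, N left in residues kg/ha).
# All figures are placeholders, not recommendations.
SEQUENCE = [
    ("field beans", 0, 80),
    ("winter wheat", 200, 30),
    ("oilseed rape", 190, 50),
]


def plan_sequence(sequence, residue_availability=0.5):
    """Return (crop, residue credit, fertiliser N) for each year in the sequence.

    residue_availability is an assumed fraction of residue N that becomes
    available to the following crop.
    """
    plan = []
    credit = 0.0
    for crop, requirement, residue_n in sequence:
        fertiliser = max(requirement - credit, 0.0)
        plan.append((crop, credit, fertiliser))
        credit = residue_n * residue_availability  # passed on to the next crop
    return plan


for crop, credit, fertiliser in plan_sequence(SEQUENCE):
    print(f"{crop:<13} residue credit {credit:5.1f}  fertiliser N {fertiliser:5.1f}")
```

Reordering `SEQUENCE` and rerunning shows how the same crops can need more or less bought-in nitrogen depending on what precedes them.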

The incorporation of crop residues into soils will interact with the tillage regime, and a cost-benefit analysis of different methods may be necessary to avoid damage to soil health which might offset the benefits. Thoughtful crop rotation and residue management also ensure that a diverse range of organic matter is added to the soil over time, which is likely to increase soil biodiversity. Businesses producing a limited range of crops are likely to find this process harder to fine-tune, but even in these cases, crop sequencing can be considered in blocks of time, in which opportunities for nutrient recapture are maximised.

Improving the accuracy of yield predictions

The information in the previous sections all helps to determine the baseline nutrient potential. Once that has been established, an accurate yield prediction provides the final part of the nutrient equation. Yield prediction in this sense is about understanding the gap between baseline nutrient availability and the nutrient requirement for achieving the predicted yield. Knowing the size of this gap allows nutrients from other sources to be applied more precisely. Yield itself is determined by a combination of genetics (choice of variety), growing conditions and management practices.
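Put as arithmetic, the gap is the crop’s requirement at the predicted yield minus what baseline sources can already supply. The sketch below assumes, for illustration only, that requirement scales linearly with yield through a per-tonne uptake figure; that relationship, the function name and every number shown are simplifying assumptions, not RB209 values.

```python
def nutrient_gap(predicted_yield_t_ha, uptake_kg_per_t, baseline_supply_kg_ha):
    """Return the nutrient (kg/ha) to be supplied from other sources.

    Requirement is approximated as predicted yield x nutrient uptake per
    tonne of produce (an assumed linear relationship). A negative gap is
    reported as zero: the baseline already covers the predicted need.
    """
    requirement = predicted_yield_t_ha * uptake_kg_per_t
    return max(requirement - baseline_supply_kg_ha, 0.0)


# Hypothetical: 8 t/ha predicted yield, 25 kg N per tonne, 60 kg N/ha from the soil
print(nutrient_gap(8.0, 25.0, 60.0))  # 140.0
```

In this example, overestimating yield by 1 t/ha would add 25 kg/ha of nutrient the crop cannot use, which is why the accuracy of the prediction matters.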

A wide range of factors affects the predictability of yield, but improving prediction accuracy has knock-on benefits for the efficiency of nutrient management. On a global or national scale, crop yields are predicted using statistical models which account for broad patterns in key factors such as climate. However, these models are too broad to be applied at farm scale. Each land manager therefore needs to develop their own ‘prediction model’ based on the factors which matter most to them. Its success depends on gathering data, attention to detail and accurate record keeping. It brings together all of the existing information to make adjustments to the maximum theoretical yield provided by the seed supplier.

Data can be gathered from a range of sources, and every record helps to build a clearer picture of the overall likelihood of achieving a given yield. Accurate historical records for each variety in each locality are also vital aids to prediction, and they can indicate the average losses or gains associated with weather conditions, pest and disease outbreaks, or changes in management practices. These records take time to build up to a useful level, since no two years are the same, but in time they can pay dividends. Yield can also be estimated using small trial plots (of at least 1 m × 1 m), where seed is sown and yield measured accurately. The average of at least 5 plots should be used, and the process needs to be repeated for each different area or soil type. This is particularly useful for assessing whether new or different varieties could provide a better yield because they are better able to withstand the conditions on a specific site.
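The trial-plot method can be worked through as below. The sketch enforces the minimum plot size (1 m × 1 m) and minimum number of plots (5) given in the text, assumes each plot’s harvest is weighed in kilograms, and converts the mean to tonnes per hectare using 1 ha = 10,000 m². The function name and plot weights are hypothetical.

```python
from statistics import mean


def plot_yield_t_ha(plot_weights_kg, plot_area_m2=1.0, min_plots=5):
    """Estimate yield (t/ha) from the mean of several small trial plots."""
    if len(plot_weights_kg) < min_plots:
        raise ValueError(f"use at least {min_plots} plots per area/soil type")
    if plot_area_m2 < 1.0:
        raise ValueError("plots should be at least 1 m x 1 m")
    kg_per_m2 = mean(plot_weights_kg) / plot_area_m2
    return kg_per_m2 * 10_000 / 1_000  # kg/m2 -> kg/ha -> t/ha


# Hypothetical harvested weights (kg) from five 1 m2 plots on one soil type
weights = [0.82, 0.78, 0.85, 0.80, 0.75]
print(f"{plot_yield_t_ha(weights):.1f} t/ha")  # 8.0 t/ha
```

Because a 1 m² plot is one ten-thousandth of a hectare, every 0.1 kg of harvest per plot corresponds to 1 t/ha, so small weighing errors matter; averaging several plots helps smooth them out.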

In future, the collection of precise data will be supported by the rapid development of sensor and remote sensing technologies. Sensors will be available on farm machinery, in the soil, and even as ‘tattoos’ on the plants themselves, returning highly accurate, real-time data about conditions in any given location. These data will be combined with lab-based optimal thresholds to give very precise yield predictions. There are already a number of remote sensing products that use image-based techniques (satellite or drone images) to detect poor growth caused by water, nutrient, disease or insect-related stress. They use factors such as subtle variations in leaf colour which visual inspection may not reveal. All of these data are fed to computer systems which create easy-to-use maps and graphs, placing powerful decision-making tools at the farm business manager’s fingertips. These technologies will inevitably come at a cost, but the efficiencies they provide may make them cost-effective at the right scale of production.

The ability to fine-tune nutrient management plans is enhanced by data collection and analysis

Applying nutrients

Having determined the gap between the baseline potential and the amount required to achieve an accurate predicted yield, attention turns to the source and form of nutrients which are applied to ‘fill the gap’.

Sources and forms

Efficient use of nutrients is always a question of cost versus benefit, but in general, nutrients that can be reclaimed from operations on-farm are preferable to ‘buying in’. These may come from manures, nitrogen-fixing crops (e.g. legumes), crop residues returned to the soil, or a combination of these. The challenge is to understand the value of these nutrient sources and how much they will contribute, as well as whether this contribution is consistent through the seasons and from year to year. For manures, this will depend on the effectiveness of manure management practices such as nutrient testing, storage and application. The rate at which leguminous crops fix nitrogen varies according to the crop, the soil conditions and their bacterial associations, but it can be estimated through soil sampling before, during and after they are grown. Crop residues can be analysed for their nutrient content, or their value can be assessed through repeat soil sampling after incorporation. Although highly variable, they are a significant nutrient resource.
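One way to value on-farm sources is to credit only the portion expected to be available to the crop, and subtract it from the gap before buying in fertiliser. The sketch below is hypothetical throughout: the source names, quantities, availability fractions and the 140 kg N/ha gap are placeholders that would need to come from manure analysis, soil sampling or published guidance.

```python
# Hypothetical on-farm nitrogen sources for one field (kg N/ha):
# (source, total N supplied, assumed fraction available to this crop)
ON_FARM_SOURCES = [
    ("cattle slurry", 90.0, 0.25),
    ("incorporated crop residues", 30.0, 0.5),
]


def bought_in_requirement(gap_kg_ha, sources):
    """Return (fertiliser N still needed, on-farm credit) in kg/ha."""
    credit = sum(total * available for _, total, available in sources)
    return max(gap_kg_ha - credit, 0.0), credit


remaining, credit = bought_in_requirement(140.0, ON_FARM_SOURCES)
print(f"on-farm credit {credit:.1f} kg N/ha, buy in {remaining:.1f} kg N/ha")
# on-farm credit 37.5 kg N/ha, buy in 102.5 kg N/ha
```

Crediting total rather than available nutrient would overstate the contribution of on-farm sources and leave the crop short, which is why understanding their value matters.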

Rate

The availability of nutrients, and a clear understanding of their benefits, has led in many cases to overuse. In Europe, for example, average nitrogen use efficiency is below 50% (and lower still in livestock systems). Despite this, Europe is actually one of the most efficient continents.

In terms of application rate, the most important factors in minimising losses are: calculating the optimum rate based on accurate yield predictions, adjusting for changes in requirements through crop growth stages, and fine-tuning application rates according to soil, weather and other management practices (e.g. tillage regime, residue incorporation). In the UK, recommendations are available in the AHDB Nutrient Management Guide (RB209).

Timing

The timing of nutrient applications (for manures and fertilisers) is critical. Recommended timings are available for all commercial products, but factors such as soil temperature (which affects the speed of breakdown), tillage practices (how quickly the material will be incorporated into the soil) and the location of manure storage (its proximity to where the manure will be used) should also be factored in. Rainfall patterns also have an important bearing on application timing, since rain contributes significantly to losses through run-off, which may result in accidental pollution. This is made worse on sloping sites. Timing may also depend on the availability of labour and equipment, and on the harvesting schedule. Unnecessary applications should be avoided in spring and autumn when crops are not growing as strongly; timing fertiliser applications to coincide with periods of rapid growth will result in more efficient use.

Method

Broadly, there is a choice to be made between imprecise and precise methods, namely surface application and injection respectively. Whilst precision techniques may be more costly, the risk of losses resulting from poor weather, particularly on sloping sites, may tip the economics in their favour. The calibration of spreaders and the use of the correct nozzles etc. also contribute significantly to efficiency. There will also be an interaction between nutrient application and irrigation and, as a general principle, irrigation should be kept to a minimum immediately after fertiliser application.

Record keeping, evaluation and refinement

Information provides the basis for decision making and, used well, will reduce nutrient losses. Record keeping (or other data gathering) therefore becomes the currency of efficiency. It is important that records reflect not only what was planned, but also any deviations from the plan. The actual decisions taken and the reasons for them should be recorded, together with unusual factors such as extreme weather events, pest or disease outbreaks, or specific variety performance. Taking time to evaluate processes accurately, review practices and consider any potential nutrient carryover to the following season can all add up to cost, efficiency and environmental benefits when accumulated over a number of years.
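Records that capture both the plan and any deviation from it can be kept in a simple structured form. The sketch below shows one hypothetical layout, not a prescribed format: the class, field names and example entries are illustrative. It stores planned and actual rates alongside the reason for any change, so deviations can be pulled out for the end-of-season review.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ApplicationRecord:
    field_name: str
    applied_on: date
    product: str
    planned_kg_ha: float
    actual_kg_ha: float
    reason: str = ""  # why the actual rate differed from the plan
    notes: str = ""   # unusual factors: weather events, pests, variety performance

    @property
    def deviation_kg_ha(self) -> float:
        return self.actual_kg_ha - self.planned_kg_ha


records = [
    ApplicationRecord("Top Field", date(2019, 3, 12), "ammonium nitrate", 70.0, 70.0),
    ApplicationRecord("Top Field", date(2019, 4, 20), "ammonium nitrate", 80.0, 50.0,
                      reason="heavy rain forecast", notes="delayed by waterlogging"),
]

# End-of-season review: list every application that departed from the plan
for r in records:
    if r.deviation_kg_ha != 0:
        print(r.field_name, r.applied_on, f"{r.deviation_kg_ha:+.0f} kg/ha", r.reason)
```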

Summary

Effective nutrient management boils down to applying the correct nutrients, in the correct amounts, at the correct times, and there are significant gains to be achieved simply by adhering to these principles. However, managing nutrients in a truly precise way requires attention to detail. This begins with a clear understanding of the specific farm context, is enhanced by gathering information, and is optimised by maximising on-farm nutrient resources. Each of these elements closes the gap between nutrient requirements and resources, not only minimising the need to purchase additional nutrient products, but also minimising environmental impact.